A Functional History of Numbers (3 of 3)

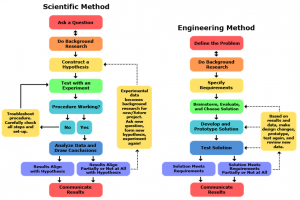

There are 3 kinds of science: the experimental, the theoretical, and the simulated1. Over the whole history of human discovery experimental scientific activities guided, and in many times surprised, the human imagination’s search to understand and control the observable universe. After sometime human knowledge seekers used the language of mathematics to create simplified, consistent theoretical models for the complex data and patterns they experimentally observe; abstractions were now put on paper. The third kind of scientific activity only appeared recently, about 75 years ago, when the first electronic computers were made; effectively creating the “human computational universe” and upgrading our scientific methods to a whole new level. The idea of this third kind of science is to computationally and visually investigate our theoretical mathematical models encoded as computer programs executed on various sets of inputs to get new patterns, ideas, and “virtual” discoveries that can be verified experimentally later or at least may provide grounds for new abstractions, theories, and practical applications. This third kind of science, the science and art of computer simulations, is now unavoidable in all scientific research and education activities. All this is made possible by using only the two numbers 1 and 0; a.k.a True and False.

After our journey with classic numbers in part one and geometric numbers in part two, in this final part of our functional history of numbers, we will take a look at a third kind of numbers: the computational numbers.

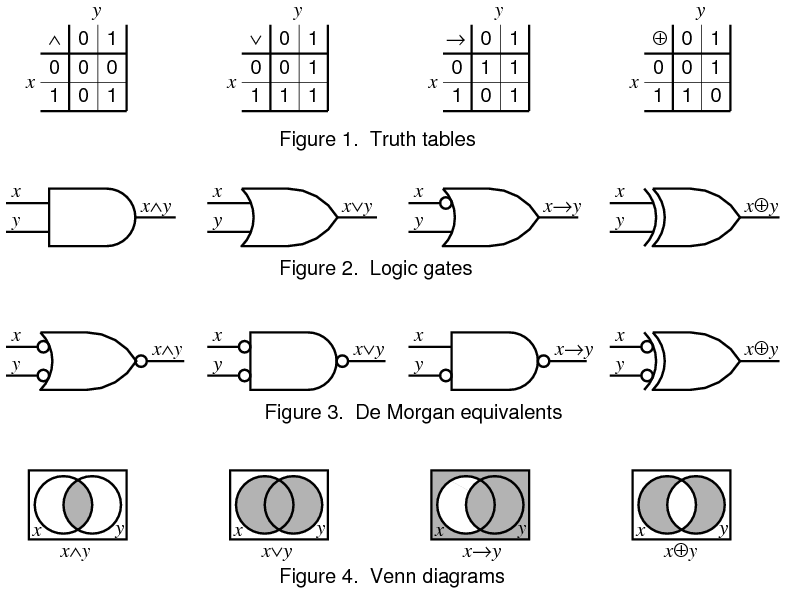

True or False: From Laws of Thought to Switching Algebra

George Boole, famous for his knowledge, kindness, and total lack of egoism was an English mathematician who died from bad cold, at the age of 49, resulting from walking from his house to his College under heavy rain and lecturing in wet clothes. Boole’s greatest achievement was to create an algebra of logic: Boolean algebra. Boole had minimal formal education due to the poverty of his family. His fellow students considered him to be something of a genius. His early training in languages2 had its share among the influences which led to the construction of Boole’s logic: he built his logic in the same way he felt languages were built3. Boole’s thinking may have been spurred on by a heated controversy raging at the time between two formidable academics working in this area4. They were the British mathematician and logician Augustus De Morgan and the Scottish philosopher and metaphysician Sir William Hamilton; the creator of quaternions. Boole came up with an ingenious formulation that synthesized the approaches of both De Morgan and Hamilton. Indeed De Morgan praised Boole’s work on logic saying:

Boole’s system of logic is but one of many proofs of genius and patience combined … That the symbolic processes of algebra, invented as tools of numerical calculation, should be competent to express every act of thought, and to furnish the grammar and dictionary of an all-containing system of logic, would not have been believed until it was proved5.

Boole discussed, in the Introduction to his book “The Mathematical Analysis of Logic”, the issue of the relevance of symbols in scientific expositions. His aim was probably to correct Hamilton’s opinion that the symbolization of mathematics could lead to the destruction of the reflective powers of students of that discipline. He contended that if symbols are used with full understanding of the laws which render them useful, “an intellectual discipline of high order is provided”. In 1854 Boole published his book “The Laws of Thought“. Boole’s goals were “to go under, over, and beyond” Aristotle’s logic by providing it with mathematical foundations involving equations; extending the class of problems it could treat from assessing validity to solving equations, and expanding the range of applications it could handle (e.g. from propositions having only two terms to those having arbitrarily many).

Boole’s work and that of later logicians initially appeared to have no engineering uses. Claude Shannon attended a philosophy class at the University of Michigan which introduced him to Boole’s studies. Shannon recognized that Boole’s work could form the basis of mechanisms and processes in the real world and that it was therefore highly relevant. In 1937 Shannon went on to write his master’s thesis:”A symbolic analysis of relay and switching circuits”, at the Massachusetts Institute of Technology, in which he showed how Boolean algebra could optimize the design of systems of electromechanical relays then used in telephone routing switches. He also proved that circuits with relays could solve Boolean algebra problems; a great milestone in the development of Switching Theory. Andrew Emerson in an article in The Guardian states:

That dissertation has since been hailed as one of the most significant master’s theses of the 20th century. To all intents and purposes, its use of binary code and Boolean algebra paved the way for the digital circuitry that is crucial to the operation of modern computers and telecommunications equipment.

Employing the properties of electrical switches to process logic is the basic concept that underlies all modern electronic digital computers. Victor Shestakov at Moscow State University proposed a theory of electric switches based on Boolean logic even earlier than Claude Shannon in 1935. But the first publication of Shestakov’s result took place only in 1941 in Russian6. Hence Boolean algebra became the foundation of practical digital circuit design; and Boole, via Shannon and Shestakov, provided the theoretical grounding for the Digital Age.

The Three of Balance

The reason behind the exclusive use of binary numbers in computing is purely physical as the electronic transistors of computers only have 2 stable states. All computer applications require testing conditions and taking actions accordingly through the ubiquitous If-Then statement. Life isn’t binary, though; you must occasionally say “I don’t know the answer” to be human. We actually need at least 3 states for our decision-making models: True, False, and Unknown. The symbol “NULL” in modern database management systems is one shining example of needing the unknown in modern computing.

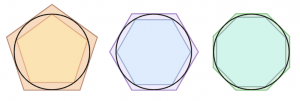

Mathematically, a ternary (i.e. base 3) number system is more efficient than a binary system in many ways; it offers the most economical way of representing integers among all number systems. A balanced ternary number system is based on the numbers (-1, 0, and 1) instead of (0, 1, and 2). This number system first appeared in the 18th century and was used for solving the Bachet’s Weights Problem attributed to Bachet in the early 17th century, but actually dating back all the way to Fibonacci or earlier7, as one of the first problems of integer partitions:

What is the least number of pound weights that can be used on a scale pan to weigh any integral number of pounds from 1 to 40 inclusive, if the weights can be placed in either of the scale pans?

The first true appearance of “pure” balanced ternary notation was in an article by Léon Lalanne in 1840, who was a designer of mechanical devices for arithmetic. Thomas Fowler independently invented and constructed a balanced ternary calculator at about the same time. The balanced ternary number system was mentioned only rarely for the next 100 years, until the development of the first electronic computers at the Moore School of Electrical Engineering in 1945–1946; at that time it was given serious consideration as a possible replacement for the decimal system. The complexity of arithmetic circuitry for balanced ternary arithmetic is not much greater than it is for the binary system, and a given number requires only 63% as many digit positions for its representation8. An implementation of this uncommonly used but beautiful symmetric number system in several computer languages can be found in this Rosetta Code article. A Demonstration of the weighting problem can be found in this Wolfram demonstration.

Perhaps someday ternary computers would replace our binary ones as described by Douglas W. Jones who wrote The Ternary Manifesto. Some researchers even began to suggest hardware implementations of this number system; for example, a system based on the Josephson junction, and another system based on a ternary optical computer architecture. Maybe we will see the “flip-flap-flop” in the near future after all.

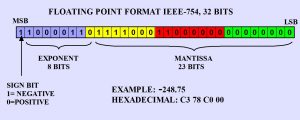

The Curious Case of Floating Points

All the geometric number systems we talked about earlier (the complex numbers, quaternions, vectors, and multivectors) can be represented inside a computer as data structures consisting of real number components. The problem is that most real numbers are impossible to accurately represent inside our computationally limited space, in time and storage, so we have to approximate. The floating-point numbers are the standard system for storing and manipulating approximations of real numbers in modern computers. The dedicated circuitry inside computers ensure fast floating-point based computations; some even rely on the GPU for accelerating parallel computations. As useful as they are, floating-point numbers have many bad mathematical properties:

- They are only a subset of rational numbers; all irrationals are impossible to represent as they have infinite decimals.

- Many common rational decimal numbers we intuitively use can only be represented in binary as recurring decimals. For example, the number (0.1) in decimal is (0.0 0011 0011 0011 0011 0011 …) in binary; leading also to an approximate representation for such simple decimals!

- Floating point numbers are not closed under any of the basic operations of addition, subtraction, multiplication or division; a numerical recipe for computational disaster under chaos theory!

- They don’t obey the important property of associativity: a+(b+c) is not always equal to (a+b)+c for floating point numbers a,b,c. The same problem is present in associativity of multiplication and distributivity of multiplication over addition.

- They are not uniformly distributed on the real number line. This is a serious problem for geometric modeling applications; applying a transformation on an object near the origin is different than applying the same transformation to the same object far from the origin.

- They have a limited range, accuracy, and precision for many practical applications.

- Two floating-point numbers in different format must be scaled before being added together. In other words, the binary points must be aligned before the addition can be performed resulting in possible loss of accuracy.

- Because of all of the above, equality and ordering tests are sometimes problematic; we must use tolerances and intervals instead of direct equality tests in many applications.

All these problems make direct implementation of mathematical models difficult for many applications. We need to add an additional “layer of protection” to ensure the model will work for an acceptable range of inputs just to avoid the bad effects of floating-point arithmetic9. There exist some approaches to reduce the bad effects of these numbers in computing like decimal floating-point, fixed-point arithmetic, and p-adic arithmetic. One other radically different solution is avoiding irrationals in our models from the start by only using rational numbers10; something that would definitely please the ancient Greeks. This approach requires us to create a library for “big integers” and base a rational numbers library on it. Another approach, common in financial applications, is to use very small units of measurement, like cents for example, and do all computations using integers; then do a final step of simple division when showing the results after accurately computing them. In any case, the floating-point binary number system will not go away anytime soon; we must take care when coding our geometric models not to allow these strange numbers to destroy the beauty of our geometry.

The Symbolic Meaning of Trees

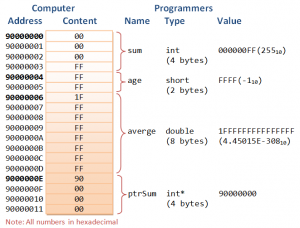

Binary integers are not just used for numerical calculations; their more important uses are for encoding symbols (using strings of characters) and for referencing memory locations (using pointers). Humans reason by symbols, they invent names for new entities on daily basis. Computers are required to store textual data and complex relations between symbolic entities to be useful; not just act as fast calculators. Strings and pointers are the bases for all other data structures that ever existed. Using sets of binary integers encoding symbols, their memory locations, and their semantic properties we have created arrays, stacks, queues, hash tables, heaps, trees, graphs, and geometrically optimized spatial data structures11. In the collective experience of computer scientists and software engineers, the most widely used data structures are trees of various kinds; think XML.

For our specific purpose of computational applications, there exists one type of software systems that relies completely on trees and their transformations: Symbolic Computation Systems a.k.a Computer Algebra Systems (CAS). The mathematical and logical base for modern CAS date back to George Boole’s method of separation of symbols, the contemporary name for a method which is a precursor of today’s abstract mathematical formalization12. Computer algebra can be defined as13:

Computer Algebra is a subject of science devoted to methods for solving mathematically formulated problems by symbolic algorithms, and to implementation of these algorithms in software and hardware. It is based on the exact finite representation of finite or infinite mathematical objects and structures, and allows for symbolic and abstract manipulation by a computer. Structural mathematical knowledge is used during the design as well as for verification and complexity analysis of the respective algorithms. Therefore computer algebra can be effectively employed for answering questions from various areas of computer science and mathematics, as well as natural sciences and engineering, provided they can be expressed in a mathematical model.

Although properly speaking, computer algebra should be a sub-field of scientific computing, they are generally considered as distinct fields because scientific computing is usually based on numerical computation with approximate floating point numbers, while symbolic computation emphasizes exact computation with expressions containing variables that have not any given value and are thus manipulated as symbols (therefore the name of symbolic computation).

Historically, computer algebra evolved out of two quite different sources: the requirements of theoretical physicists and research into classical symbolic artificial intelligence in the 60’s. Physics is one of the oldest application fields of computer algebra; in fact, many of the early computer algebra systems were designed or strongly influenced by physicists in order to suit their research needs, in particular, the need to handle highly complicated mathematical relations with parameters in an exact way. The other source for CAS development was symbolic AI, the dominant paradigm of AI research from the middle fifties until the late 1980s, that was motivated by the physical symbol system hypothesis, later challenged from many directions, stating: “a physical symbol system has the necessary and sufficient means for general intelligent action”. For example, Mathematica contains some form of an internal knowledge base and inference engine, the main components of an expert system symbolic AI, as part of its core components. In addition, many of the historical developments required for the normal construction and operation of modern CAS were developed out of symbolic AI research including timesharing, interactive interpreters, graphical user interfaces and the computer mouse, rapid development environments, the linked list data structure, automatic storage management, symbolic programming, functional programming, dynamic programming and object-oriented programming14.

As of today, the most popular commercial systems are Mathematica and Maple, which are commonly used by research mathematicians, scientists, and engineers. Freely available alternatives include Sage which can act as a front-end to several other free and nonfree CAS. Computer algebra systems have profoundly impacted scientific activities in many ways:

- Standard problems in high school mathematics can be solved by the push of a button nowadays; for example, see the Wolfram Demonstration Project online site.

- They enable to teach the more application-oriented material, which is usually more extensive but also more interesting, and there is more time to explore more general and conceptual aspects of mathematics. Both can considerably improve comprehension of mathematical methods, their potential, and their limitations, by non-mathematicians.

- The use of computer algebra systems has become a standard tool for experiments in advanced research that help to find, support, or refute conjectures. They have become absolutely indispensable in the design and update of tables of mathematical, physical or other scientific objects.

- The goals of computer algebra constituted a major challenge for the development and refinement of a wide range of computer science tools and methods, among them list processing, abstract data types, parametric polymorphism, constraint logic programming, parallel computing at various levels of granularity, dynamical memory management, user interfaces and interfaces between systems, mathematical knowledge representation and management.

This site is concerned with exploring the computational universe using geometric algebra. Naturally, the main utility for such exploration will definitely be based on a computer algebra system.

Arriving at an Exact Value, Give or Take

In science and engineering, data gathering is of prime importance. Our ability to measure has developed over the ages to remarkable levels of accuracy. Many of our computational algorithms are originally designed to deal explicitly with well defined exact numerical values. On the other hand, real data obtained by measurement, however accurate, are mere approximations of the actual value. Sometimes there is not even an exact value but just an acceptable range of values of some random variable. Repeatability, the main feature of scientific experimentation, is in many cases only possible within a “margin of error” or tolerance. To make things more complicated, there are many natural and computational systems that are very sensitive to small changes in their initial conditions and parameter variations; any small deviation from our theoretical computations will result in unpredictable behavior of the studied system; this is called chaos theory. Many solutions are used for this type of problem: probability theory, fuzzy logic, propagation of error, and interval arithmetic.

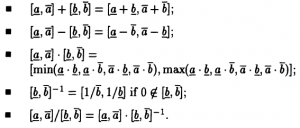

Interval arithmetic is a method developed by mathematicians since the 1950s and 1960s as an approach to putting bounds on rounding errors and measurement errors in mathematical computation and thus developing numerical methods that yield reliable results. Very simply put, it represents each value as a range of possibilities. For example, instead of estimating the height of someone using standard arithmetic as 2.0 meters, using interval arithmetic we might be certain that that person is somewhere between 1.97 and 2.03 meters. Whereas classical arithmetic defines operations on individual numbers, interval arithmetic defines a set of operations on intervals. Interval arithmetic is not a completely new phenomenon in mathematics; it has appeared several times under different names in the course of history. For example, Archimedes calculated lower and upper bounds 223/71 < π < 22/7 in the 3rd century BC.

At some point in time, research in interval computations was too optimistic; especially in applications related to global optimization15. But as research progressed many problems started to show. Most of these problems resulted from the bad semantics behind the basic rules of interval arithmetic. For example the operation of subtraction of intervals is not the reverse of addition, simple equations on intervals like A + X = [0, 0] and A X = [1, 1] have no interval solution. Another problem is interval dependency where traditional interval computation algorithms assume all occurrences of intervals to be independent so in an expression like x(1 + 2x) the two x’s are considered independent resulting in flawed outcomes. This means that most traditional floating point algorithms will never work correctly for intervals. Specific algorithms for solving the same problems will need to be developed specifically for intervals.

The more fundamental problem with intervals arises from the logical interpretation of intervals in practical applications. If we take the interval [a, b] for, say, a temperature satisfying some condition (predicate); what do we really mean by it:

- All values inside this interval satisfy the given condition.

- There exist one or more values inside this interval satisfying the given condition.

The difference may seem minor at first glance but it actually leads to different semantics in problems having many variables and many interactions between the variables. Realizing such problems researchers began to investigate other computational laws for intervals based on the actual semantics of their applications16. This serious problem with interval semantics is the main cause preventing the universal use of interval arithmetic in many computational problems, even though many software libraries already exist for handling traditional interval arithmetic, including the IEEE Std 1788-2015 standard. I suspect that no single number system can alone handle all kinds of inaccuracies in data and intermediate computations. We need to re-think our algebra and algorithms for each problem area to select the most suitable solutions.

A Functional Definition of Numbers

In our 3 part journey with numbers, we saw some of the functional roles they play in our modern “computation oriented” civilization. It’s very difficult to give a single universal definition of a number. The reason is that each branch of mathematics, physics, and engineering depends on different kinds of numbers with many interpretations of each. Nevertheless, we can approach a definition using the functional role number systems play in our continuous investigations. The general characteristics of numbers include:

- Numbers are abstract symbolic mathematical entities with basic operations of, at least, addition and multiplication; algebra is the art and science of manipulating numbers. Some number systems are closed under their operations and some are not (for example floating-point numbers).

- Numbers always result from finite or infinite processes (counting, partitioning, extending in dimension, geometric construction, digital manipulation etc.). We can only use infinite processes as conceptual abstractions while we can only implement our computational abstractions using finite processes.

- The same number or class of numbers can be found\utilized through many processes: geometric, algebraic, physical, measurement, limiting, computing etc. In this sense, numbers are the most fundamental of all abstractions serving a universal encoding and exchange of information medium between all parts of the human scientific activity.

Numbers are no more than abstract tools of the mind; they must be used wisely and creatively. Geometric algebra can be an excellent theoretical base for geometric modeling that encompasses, generalizes, and interprets complex, quaternion, and vector-based abstractions. On the algorithmic and implementation side, we need much more work because of the limited ability to use floating-point numbers for representing our abstract numerical patterns. We need to create new algorithms for our computational needs even for well-studied mathematical models. The blind conversion of abstractions into implementations result in all sorts of problems17. One very powerful interactive geometry software, Cinderella, avoided many of the problems other similar systems face not by increasing floating point accuracy or using interval arithmetic, but be intelligently using more advanced geometry-oriented algebraic structures on complex numbers; see this technical background for some details. This level of intelligence in software design requires creative use of our number systems usually unobtainable by studying traditional mathematics\engineering textbooks alone.

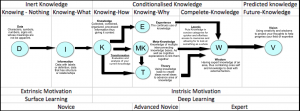

If we take a closer look at the famous DIKW Pyramid, we see that numbers are needed at every stage in our non-stop pursuit of wisdom. However, the effective use of numbers in such pursuit of wisdom requires much wisdom in its own right. We need deep knowledge about a problem we’re trying to solve in order to select the best numbers to represent our solution, and we need to use whatever numbers we see potentially useful to reach a deep understanding of our problem. In effect, using numbers in a scientific activity should be an iterative process of four steps applied again and again in the long run:

- Select one or several number systems for the problem.

- Represent your data and model with the selected number systems.

- Study your system using the numeric tools you’ve selected.

- Assess your findings and, perhaps, change some or all numbers you’ve worked with to more suitable number systems.

Some number system might be better at an early stage of data gathering, some other might be better at a later stage of analysis, yet another one should be used in the implementation stage on computers, while a totally different number system is best used for teaching concepts of the exact same problem. For each problem area, we need to be deeply aware of its application and computation requirements to select and use suitable number systems to arrive at our goals. Any other path will result in a large number of ad hoc trial and error based techniques that may never advance our understanding, both collective and individual, for our particular application area. I don’t believe that we are at the danger of arriving at an “artificial intelligence” that can perform such “deeply creative and artistic” methods of selecting and using numbers anytime soon, or perhaps never. I do believe that many of us have completely been converted into “mindless computational machines” blindly using a specific limited set of number systems that we were taught to use with no idea whatsoever about the universe of other number systems out of our immediate scope of narrow specialty; this is a clear and present danger to our human scientific side. I hope this site may widen the “numerical horizon” of some of us, including me, to the point of no return.

- Stephen Wolfram, “A New Kind of Science”. Wolfram Research 2002 ↩

- It’s worth noticing that 3 of our central mathematicians in this series were brilliant linguists: Hamilton, Grassmann, and Boole. See the studies here and here for the relation between mathematics and language ↩

- Luis M. Laita et al., “George Boole, a Forerunner of Symbolic Computation”. International Conference AISC 2000 Madrid, Spain, July 17–19, 2000 Revised Papers ↩

- Luis M. Laita, “Influences on Boole’s logic: The controversy between William Hamilton and Augustus De Morgan”. Annals of Science Volume 36, Issue 1, 1979 ↩

- George Boole 200, University College Cork, Online ↩

- Radomir S. Stankovic and Jaakko Astola, “From Boolean Logic to Switching Circuits and Automata – Towards Modern Information Technology”. Springer 2011 ↩

- Mohammad Bagheri, “Recreational Problems from Hasib Tabari’s Miftāh al-mu’āmalāt”. GANITA BHARATI Vol. 21, 1999. Online Version. ↩

- Donald E. Knuth, “The Art of Computer Programming Volume 2: Seminumerical Algorithms, 3rd Edition”. Addison-Wesley Professional 1997 ↩

- For real instances of disastrous software failure due to floating-point errors see section 3.10 “The Elephant in the Living Room” in Ronald T. Kneusel, “Numbers and Computers”. Springer 2015 ↩

- N.J. Wildberger, “Divine Proportions: Rational Trigonometry to Universal Geometry”. Wild Egg Books 2005 ↩

- Hanan Samet, “Foundations of Multidimensional and Metric Data Structures”. Morgan Kaufmann 2006 ↩

- Luis de Ledesma et al., “A Computational Approach to George Boole’s Discovery of Mathematical Logic”. Artificial Intelligence Volume 91 Issue 2, April 1997 ↩

- Johannes Grabmeier, Erich Kaltofen, Volker Weispfenning (Editors), “Computer Algebra Handbook – Foundations, Applications, Systems”. Springer 2003 ↩

- Stuart Russell, Peter Norvig, “Artificial Intelligence: A Modern Approach, 2nd Ed.”. Prentice Hall 2002 ↩

- Eldon Hansen, G. William Walster (Editors), “Global Optimization Using Interval Analysis: Revised And Expanded, 2nd Edition”. CRC Press 2003 ↩

- Sainz, M.A., Armengol, J., Calm, R., Herrero, P., Jorba, L., Vehi, J, “Modal Interval Analysis – New Tools for Numerical Information”. Springer 2014 ↩

- Joab Winkler, Mahesan Niranjan (Editors), “Uncertainty in Geometric Computations”. Springer 2002 ↩