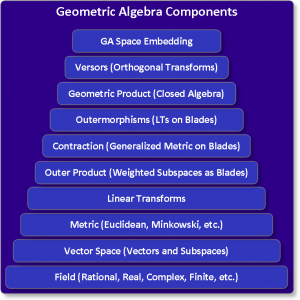

Elements of Geometric Algebra

Any useful mathematical structure consists of several integrated abstract elements. The mathematical structure of Geometric Algebra (GA) is sophisticated, yet elegant and easy to understand. From my point of view as a software engineer, I can identify 10 main elements 1 of the mathematical structure of GA. Some of these elements are well-studied mathematical disciplines in their own right. Integrating the 10 elements, however, produces a rich mathematical language capable of expressing much more than the mere sum of its parts. In this post, I will describe each element and talk a little about its significance and varieties without delving into mathematical details. The interested reader can find many tutorials and links that explain the mathematics of Geometric Algebra in full detail on my GA Online Resources page. The information in this post can be useful for someone who is starting to study GA and wants a clear roadmap for understanding and relating its main concepts and algebraic tools.

Reaching Escape Velocity

It’s physically hard to leave the Earth for space. We need to build a complex system, with lots of engineering effort, to overcome Earth’s gravity. At some times in history, it was conceptually even harder for people to imagine that this was possible, or, for that matter, that the Earth is round. As soon as we got out there, we could create a whole new world of concepts, possibilities, activities, and applications related to space exploration that affect our daily lives.

A similar situation exists in the way we understand and model our physical reality. We tend to be conceptually limited to thinking geometrically in 3D Euclidean space; many people even think only in 2D. This tendency is most apparent in our reliance, for more than a century, on 3D vector analysis, with its limited mathematical structure and its special-case algebraic operations, the dot product and the cross product, extracted from Hamilton’s algebra of quaternions 2. To escape from this conceptual prison, we need to embrace 19th-century geometry 3 with its transcending, dimension-independent concepts and ideas. The problems we face while trying to understand and use this fascinating set of geometric ideas are analogous to the difficulties we faced trying to reach Earth’s escape velocity. We need a powerful mathematical structure to symbolically encode, manipulate, and explore such concepts and ideas freely. Geometric Algebra is the most powerful candidate for this difficult task. To understand why, we need to understand GA’s main elements and how they integrate into an algebraic language suitable for exploring these transcending geometric ideas better than any other single algebraic tool currently available.

1. The Field

At the base of the geometric algebra structure, we find the scalars (i.e. numbers) used in representing and processing geometric quantities. We mostly work with rational, real, and complex scalars in our geometric models and applications. At the most abstract level, all three number systems share essential properties that are captured by the mathematical concept of a Field. Many other, rather strange, mathematical fields (number systems) exist and can be the basis for constructing a geometric algebra for some specific application, such as finite fields, p-adic numbers, and hyperreal numbers. At the other extreme, at the lowest level of any geometric computing software implementation, concrete representations of numbers, like the notorious floating-point numbers, are the practical data representation method. All geometric processing happens through primitive operations on numbers inside computers.
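As a minimal sketch of what sharing the field axioms buys us, the Python snippet below writes one routine, `solve2`, using only `+`, `-`, `*`, and `/`, and runs it unchanged over the rationals (`fractions.Fraction`), over floating-point numbers, and over the finite field GF(7). The `GF` class and `solve2` are hypothetical helpers written for this illustration only; they are not taken from any GA library.

```python
from fractions import Fraction


class GF:
    """Element of the finite field GF(p), p prime (hypothetical helper)."""

    def __init__(self, value, p=7):
        self.p = p
        self.v = value % p

    def _coerce(self, other):
        return other if isinstance(other, GF) else GF(other, self.p)

    def __add__(self, other):
        return GF(self.v + self._coerce(other).v, self.p)

    def __sub__(self, other):
        return GF(self.v - self._coerce(other).v, self.p)

    def __mul__(self, other):
        return GF(self.v * self._coerce(other).v, self.p)

    def __truediv__(self, other):
        # Fermat's little theorem: a^(p-2) is the inverse of a (mod p) for a != 0.
        o = self._coerce(other)
        return GF(self.v * pow(o.v, self.p - 2, self.p), self.p)

    def __eq__(self, other):
        return self.v == self._coerce(other).v

    def __repr__(self):
        return f"GF{self.p}({self.v})"


def solve2(a, b, c, d, e, f):
    """Solve a*x + b*y = e and c*x + d*y = f by Cramer's rule.

    Only field operations are used, so the numbers of any field work."""
    det = a * d - b * c
    return (e * d - b * f) / det, (a * f - e * c) / det


coeffs = (1, 2, 3, 4, 5, 6)
print(solve2(*[Fraction(v) for v in coeffs]))   # exact rationals
print(solve2(*[float(v) for v in coeffs]))      # floating point
print(solve2(*[GF(v, 7) for v in coeffs]))      # finite field GF(7)
```

The same symbolic procedure yields x = -4, y = 9/2 over the rationals and the reals, and x = 3, y = 1 in GF(7), where 9/2 has a different meaning.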

Historically, the first attempts to find an algebra to represent geometry resulted in trigonometry and analytic geometry. These two methods, still used today, essentially relate sets of numbers (representing coordinates, lengths, angles, areas, volumes, etc.) using many equations that can be useful in simple 2D and 3D geometric applications 4. For more involved or abstract geometric reasoning tasks, the equations become too complex to be useful even for geometrically simple curves and surfaces. Eventually, the need for representing geometry in higher dimensions led to the development of vector spaces.

2. The Vector Space

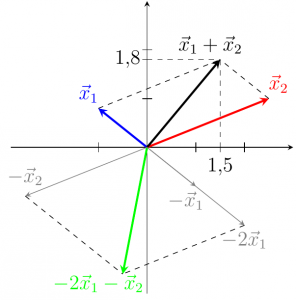

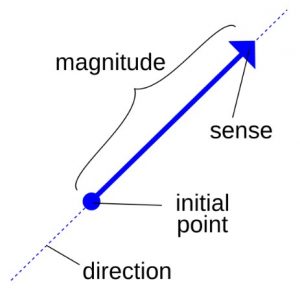

The second component in the mathematical structure of geometric algebra is a finite dimensional Vector Space over the selected field. Here the term “vector” is taken in its most abstract meaning as a mathematical entity satisfying specific properties. The main purpose of vector spaces is to study properties of sets of entities closed under Linear Combinations. Many objects in mathematics can be used as vectors in this abstract context including:

- Classical Euclidean vectors over real numbers (straight arrows having “length” and “direction” in space).

- Any field is actually a 1D vector space over itself.

- Coordinate spaces, i.e. tuples of a fixed size with componentwise addition and scalar multiplication, are common representations of vector spaces.

- Matrices of a fixed size form a vector space; matrix multiplication gives them additional structure.

- Polynomials of one or more variables.
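A minimal sketch of this shared abstraction in Python: one `linear_combination` routine that relies only on the addition, scalar multiplication, and zero vector it is handed, applied both to coordinate tuples and to polynomials represented as plain functions. All helper names here are hypothetical, chosen for illustration.

```python
def linear_combination(coeffs, vectors, add, scale, zero):
    """Compute c1*v1 + c2*v2 + ... using only the supplied vector-space operations."""
    result = zero
    for c, v in zip(coeffs, vectors):
        result = add(result, scale(c, v))
    return result


# Coordinate tuples: componentwise addition and scalar multiplication.
def tuple_add(u, v):
    return tuple(a + b for a, b in zip(u, v))


def tuple_scale(c, v):
    return tuple(c * a for a in v)


# Polynomials represented abstractly as Python functions of x.
def func_add(f, g):
    return lambda x: f(x) + g(x)


def func_scale(c, f):
    return lambda x: c * f(x)


def func_zero(x):
    return 0


t = linear_combination([2, -1], [(1, 0, 3), (0, 1, 1)], tuple_add, tuple_scale, (0, 0, 0))
p = linear_combination([2, 3], [lambda x: x ** 2, lambda x: x], func_add, func_scale, func_zero)
print(t)      # (2, -1, 5)
print(p(2))   # 2*4 + 3*2 = 14
```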

To correctly understand Geometric Algebra, we must first understand Vector Spaces. This can only be done from the Abstract Algebra point of view 5, not from the coordinate-based Matrix Algebra point of view 6. It’s important for engineering and computer science students to be exposed to abstract algebra early in their studies, to free their minds from numerical, coordinate-based representations and ascend to the level of abstraction necessary for Abstract Geometric Thinking 7. In summary, a vector is a symbolic abstraction, distinct from the concrete tuple of coordinates that can represent it numerically within a given basis.
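To see the distinction between a vector and its coordinates, the sketch below (hypothetical helpers `coordinates` and `from_coordinates`, 2D only, exact arithmetic via `fractions.Fraction`) computes the coordinates of one vector in two different bases and rebuilds the same vector from either tuple.

```python
from fractions import Fraction


def coordinates(v, b1, b2):
    """Coordinates (x, y) of the 2D vector v in the basis {b1, b2}.

    Solves x*b1 + y*b2 = v with Cramer's rule, using exact rationals."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent, not a basis")
    x = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    y = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return x, y


def from_coordinates(x, y, b1, b2):
    """Rebuild the vector x*b1 + y*b2 from its coordinates."""
    return (x * b1[0] + y * b2[0], x * b1[1] + y * b2[1])


v = (3, 1)                                # one abstract vector...
c_std = coordinates(v, (1, 0), (0, 1))    # ...is (3, 1) in the standard basis
c_new = coordinates(v, (1, 1), (1, -1))   # ...and (2, 1) in the basis {(1, 1), (1, -1)}
```

The two coordinate tuples differ, yet both describe the same vector, which is exactly what `from_coordinates` recovers.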

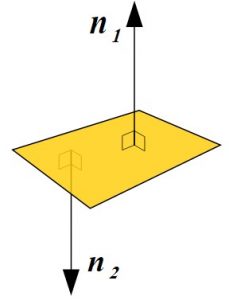

3. The Metric

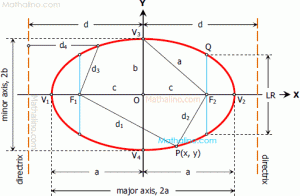

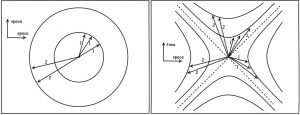

One of the simplest geometric figures we know about from early childhood is the circle. When we grow up a little, we are told that a circle is the set of all points having equal distance from a fixed point, its center. When we grow up more we discover that the concept of distance has many meanings. For example, if we study physics and read about relativity we find a very strange kind of distance defining what is called the Minkowski Space where two different points can have a distance equal to zero! Because the difference between two points (positions) is, geometrically, a vector (direction), we may have a non-zero vector with zero length in this strange space! Such kinds of distances are found all over physics, engineering, and computer science. The familiar Euclidean Space is just a special case in this collection.

Mathematically, we capture the concept of distance using Metrics and use it to define Metric Spaces having Metric Geometry. It’s not possible to model many important relations between points, vectors, and subspaces without first defining a metric. Properties and relations like distance, length, angles, orthogonality, etc. require a well-defined metric. By associating different metrics with the same vector space, we completely change the kinds of geometric concepts we can describe. This is why the metric we select for constructing a Geometric Algebra is very important in all following geometric interpretations of the space and its multivectors.

Applying the Geometric Generators pattern to different metrics results in very different sets of points. For example, using a point C and a number r, we can generate a circle by finding all other points at distance r from C. In Euclidean space this generates the familiar round set of points we call a circle. In other metrics, the result is quite different. The beauty of 19th-century geometry can only be seen using such an important mathematical concept, which is also a fundamental part of the construction of Geometric Algebra.

Algebraically, we can use the inner product of vectors (the dot product in Euclidean space) to represent the metric associated with their vector space. For the construction of Geometric Algebra, a better algebraic foundation for a metric is a symmetric bilinear form or the equivalent quadratic form.
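A minimal Python sketch of a metric given as a symmetric bilinear form, assuming the form is stored as a symmetric matrix G so that B(u, v) = u^T G v and Q(v) = B(v, v). It shows the polarization identity that recovers B from Q, a non-zero vector of zero squared length in a 2D Minkowski plane, and the fact that this metric’s “unit circle” is a hyperbola. The two metric matrices are illustrative choices, not taken from the text.

```python
import math

EUCLIDEAN_2D = [[1, 0], [0, 1]]
MINKOWSKI_2D = [[1, 0], [0, -1]]   # signature (+, -): an illustrative choice


def bilinear(u, v, g):
    """Symmetric bilinear form B(u, v) = u^T G v for a symmetric matrix G."""
    n = len(u)
    return sum(u[i] * g[i][j] * v[j] for i in range(n) for j in range(n))


def quadratic(v, g):
    """Quadratic form Q(v) = B(v, v), the 'squared length' of v under G."""
    return bilinear(v, v, g)


def polarize(u, v, g):
    """Recover B from Q alone: B(u, v) = (Q(u + v) - Q(u) - Q(v)) / 2."""
    w = [a + b for a, b in zip(u, v)]
    return (quadratic(w, g) - quadratic(u, g) - quadratic(v, g)) / 2


null = (1, 1)   # non-zero, yet Q(null) = 1 - 1 = 0 in the Minkowski plane

# Points at Minkowski 'distance' 1 from the origin satisfy x^2 - y^2 = 1:
# the 'unit circle' of this metric is a hyperbola, sampled here.
unit_circle = [(math.cosh(t), math.sinh(t)) for t in (-1.0, 0.0, 0.5, 2.0)]
```

The same vector (1, 1) has squared length 2 under the Euclidean matrix and 0 under the Minkowski one: only the metric changed, not the vector space.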

4. Linear Transforms

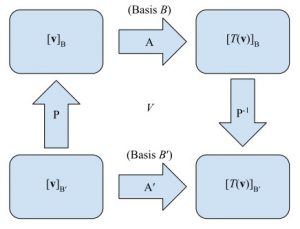

As engineers, we tend to perceive a Linear Transform as a matrix that, when multiplied by a coordinate vector, generates another coordinate vector. This is another case of mixing up the representation with the concept. Just as vectors are abstractions, linear transforms are abstractions. We should understand that matrices are not the only, or even the best, representations of linear transforms in all cases.

This way of thinking explains why many properties of a (square) matrix are invariant under a change of basis: rank, invertibility, eigenvalues, determinant, and, for orthonormal changes of basis, the orthogonality of its rows/columns. These properties are independent of the selected basis and are actually properties of the abstract linear transform the matrix represents. Some operations on matrices preserve such properties and can be used to study the linear transform, while other matrix operations are pure calculations without significant meaning with regard to the linear transform the matrix represents. Studying Geometric Algebra requires this level of distinction, especially when generalizing linear transforms from acting on vectors to acting on subspaces, i.e. outermorphisms operating on GA blades.
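As a small numerical check of these invariants, the sketch below (pure Python, 2x2 only, exact `fractions.Fraction` arithmetic, hypothetical helper names) rewrites one operator A in a new basis as P^-1 A P and compares the determinant and the trace. For a 2x2 matrix these two numbers fix the characteristic polynomial λ^2 - tr(A) λ + det(A), hence the eigenvalues (2 and 3 for this A).

```python
from fractions import Fraction


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def trace2(m):
    return m[0][0] + m[1][1]


def inv2(m):
    """Exact inverse of an invertible 2x2 matrix using rational arithmetic."""
    d = Fraction(det2(m))
    return [[m[1][1] / d, -m[0][1] / d], [-m[1][0] / d, m[0][0] / d]]


A = [[2, 1], [0, 3]]                 # an operator written in the standard basis
P = [[1, 2], [1, 3]]                 # the columns of P form another basis
B = matmul(inv2(P), matmul(A, P))    # the same operator written in the new basis
```

The entries of B differ from those of A, but determinant, trace, and therefore eigenvalues do not: they belong to the operator, not to its matrix.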

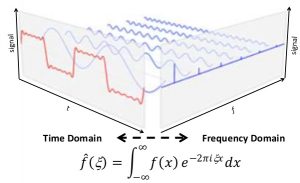

In engineering, the use of linear transforms is particularly important. Most engineers are taught to use and analyze linear systems at an early stage of their studies. If we model a physical system as an abstract linear system, we can analyze its response to a complicated input signal by decomposing the signal into simpler ones. Here we consider a signal as a vector that can be expressed as a linear combination of basis vectors (basis signals). Using the linear transform the system performs, we can compute the system output for each basis signal and apply the same linear combination to these outputs to obtain the total system output for the full input signal. This is called the Superposition Principle, a cornerstone of modern engineering.
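A minimal sketch of the Superposition Principle, assuming a simple discrete-time linear system y[n] = x[n] + 2·x[n-1] chosen only for illustration: the input is decomposed into impulse (basis) signals, the response to each impulse is computed, and the same linear combination of responses reproduces the direct output.

```python
def system(x):
    """Hypothetical linear system: y[n] = x[n] + 2 * x[n - 1], with x[-1] = 0."""
    return [x[n] + 2 * (x[n - 1] if n > 0 else 0) for n in range(len(x))]


def impulse(k, length):
    """Basis signal: 1 at index k, 0 elsewhere."""
    return [1 if n == k else 0 for n in range(length)]


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def scale(c, u):
    return [c * a for a in u]


x = [3, -1, 4, 2]          # a 'complicated' input signal
direct = system(x)         # response to the whole signal at once

# Superposition: x = sum_k x[k] * impulse_k, so the output is
# sum_k x[k] * system(impulse_k).
superposed = [0] * len(x)
for k, xk in enumerate(x):
    superposed = add(superposed, scale(xk, system(impulse(k, len(x)))))

print(direct)       # [3, 5, 2, 10]
print(superposed)   # [3, 5, 2, 10]
```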

One important use of linear transforms in geometric modeling is the representation of Projective Transformations (Homographies) between Projective Spaces. The study and use of Projective Geometry are fundamental to many applications in Computer Graphics, Computer Vision, Robotics, and Image Processing. Geometric Algebra is a perfect fit for expressing and developing ideas related to projective geometry as illustrated by the many articles relating GA to Projective Geometry both theoretically and practically.
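For concreteness, this is how a projective transformation is commonly applied in practice: a 3x3 homography matrix acts linearly on homogeneous coordinates (x, y, 1), and the result is projected back by dividing by the last coordinate. The matrix `H` and the helper `apply_homography` are hypothetical examples; the snippet also checks that scaling H by a non-zero factor yields the same transformation.

```python
from fractions import Fraction


def apply_homography(h, point):
    """Map a 2D point through a 3x3 homography h (nested lists).

    The point is lifted to (x, y, 1), multiplied by h, and projected back
    by dividing by the last homogeneous coordinate W."""
    x, y = point
    X, Y, W = (h[i][0] * x + h[i][1] * y + h[i][2] for i in range(3))
    if W == 0:
        raise ValueError("the point is mapped to infinity")
    return (Fraction(X) / W, Fraction(Y) / W)


H = [[2, 0, 1],
     [0, 2, 0],
     [0, 1, 1]]          # hypothetical homography with a non-trivial last row

p = apply_homography(H, (1, 1))
# Scaling H by any non-zero factor represents the same projective map.
q = apply_homography([[3 * e for e in row] for row in H], (1, 1))
```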

If we use GA to model some practical problem, we probably need several GA spaces each representing one aspect of the problem. In this case, we need to define several linear transforms to switch between the GA spaces. As I will explain shortly, linear transforms on vectors can be automatically extended to subspaces using Outermorphisms. This way, any multivector can be transformed between GA spaces as we need.

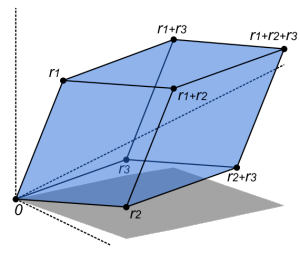

5. The Outer Product

Now we get to the new, and fun, elements of geometric algebra. A direction vector V in Euclidean space can be used to represent an infinite straight line passing through the origin O parallel to the vector V. By multiplying a scalar with our vector and adding the result to the origin point we can generate any point on this specific line. We can also take any point P on this line and find the vector W = P – O. Now V and W are linearly dependent because they are just scaled versions of each other. This construction is familiar to most of us, but the following generalization is not. From this kind of construction we can abstract some general properties of a vector:

- A vector is an algebraic representation of a one-dimensional subspace of the vector space (the line passing through the origin in our Euclidean space example).

- Many vectors can represent the same subspace; just by varying the scalar they are multiplied by we get a new algebraic representation. All these vector representations are linearly dependent.

- The main geometric properties of any vector are: its dimension (one), its attitude in space, and the scalar it’s multiplied with (its geometric length in the special case of Euclidean spaces).

- Vectors within the same subspace can be linearly combined to produce other vectors in the same subspace, they can also be compared, by a division, to extract their relative “lengths” or associated scalars.

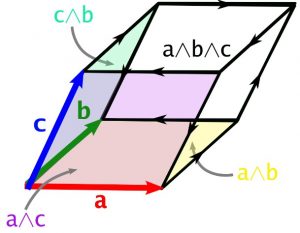

These 4 properties can be generalized to subspaces of higher dimension (2, 3, etc.) within the larger vector space. To do so, we use a new algebraic operation called the Outer Product, or the Exterior Product. Using the outer product of several vectors, we get a new algebraic concept called the Blade. Blades come in grades depending on how many Linearly Independent (LID) vectors are multiplied using the outer product. We then have 0-Blades (simple scalars or numbers), 1-Blades (vectors), 2-Blades (bivectors, the outer product of two LID vectors), 3-Blades (trivectors), etc.

The general characteristics of blades follow a generalization of vectors with an additional structure provided by the outer product:

- A blade is an algebraic representation of a k-dimensional subspace of the vector space (the line, plane, volume, hyperplane passing through the origin in our Euclidean space example). Any vector having zero outer product with a blade is inside its subspace. This is called the Outer Product Null Space (OPNS) representation of subspaces.

- Many k-blades can represent the same k-dimensional subspace; just by varying the scalar they are multiplied by we get a new algebraic representation. All these blade representations are linearly dependent.

- The main geometric properties of any k-blade are: its dimension (k), its attitude in space, and the scalar it’s multiplied with (its geometric length, area, volume, etc. in the special case of Euclidean spaces).

- Blades representing the same subspace can be linearly combined to produce other blades 8 in the same subspace, they can also be compared, by a division, to extract their relative “weights” or associated scalars.
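The following Python sketch illustrates these properties for 2-blades in 3D Euclidean space, assuming bivectors are stored by their three components on e1∧e2, e1∧e3, e2∧e3. Two different pairs of vectors spanning the same plane give proportional 2-blades, and the “division” mentioned above is realized here (an illustrative choice) as the common ratio of corresponding components; its sign records the relative orientation. Helper names are hypothetical.

```python
from fractions import Fraction


def wedge(a, b):
    """Outer product of two 3D vectors as bivector components on
    (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


def blade_ratio(A, B):
    """Return s with A = s * B if the bivectors are proportional, else None.
    B must be non-zero."""
    pivot = next(i for i, c in enumerate(B) if c != 0)
    s = Fraction(A[pivot], B[pivot])
    return s if all(a == s * b for a, b in zip(A, B)) else None


a, b = (1, 0, 2), (0, 1, 1)
u = tuple(2 * x + y for x, y in zip(a, b))   # 2a + b lies in the same plane
v = tuple(x - y for x, y in zip(a, b))       # a - b lies in the same plane

A = wedge(a, b)    # one 2-blade for the plane
B = wedge(u, v)    # another 2-blade for the same plane: B = -3 A
```

Expanding by hand gives the same result: (2a + b)∧(a - b) = -2 a∧b + b∧a = -3 a∧b, since a∧a = b∧b = 0 and b∧a = -a∧b.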

Many of the algebraic and geometric operations we are used to doing with vectors and numbers can be done more elegantly using blades. The most important benefit of using blades and the outer product is that they algebraically capture the concept of linear independence. To find whether a vector belongs to some subspace using classical matrix-based linear algebra, we need to solve a set of linear equations. This is essentially an algorithmic procedure, not a simple abstract algebraic operation that can be combined with other algebraic operations. Using a blade that represents our subspace, we simply take its outer product with the vector: if the result is zero, the vector belongs to the subspace. The more we reduce the need for “matrix manipulation algorithms” and use basic symbolic algebra instead, the clearer and more unified our mathematical models become.
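A minimal sketch of this membership test in 3D Euclidean space, assuming bivectors are stored by their components on (e1∧e2, e1∧e3, e2∧e3): the outer product of a 2-blade with a vector has a single e1∧e2∧e3 component, and it vanishes exactly when the vector lies in the blade’s plane. Function names are hypothetical.

```python
def wedge(a, b):
    """Bivector a^b as components on (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


def wedge_bv(B, v):
    """e1^e2^e3 coefficient of the trivector B^v.
    Uses e12^e3 = e123, e13^e2 = -e123, e23^e1 = e123."""
    return B[0] * v[2] - B[1] * v[1] + B[2] * v[0]


def in_subspace(v, B):
    """OPNS test: v lies in the plane of the 2-blade B iff B^v = 0."""
    return wedge_bv(B, v) == 0


B = wedge((1, 0, 2), (0, 1, 1))   # the plane spanned by two vectors
```

No linear system is solved: one product decides membership, and the same expression also equals the determinant of the three vectors.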

6. The Contraction

Vectors belonging to different 1-D subspaces in the Euclidean space can be compared and manipulated in several ways:

- We can find the “length” of a vector using its inner product with itself.

- We can find the “angle” between two vectors using their inner product and lengths.

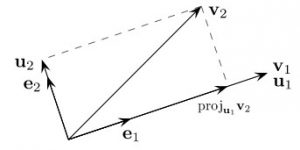

- We can project a vector on another also using the inner product.

- We can convert a set of LID vectors into orthogonal ones also using an algebraic process that depends mostly on the inner product.
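These four classical uses of the inner product can be sketched in a few lines of plain Python (Euclidean metric assumed, helper names hypothetical). The Gram-Schmidt routine subtracts projections onto the already-orthogonalized vectors, the so-called modified variant, which gives the same result as the classical one in exact arithmetic.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def length(v):
    return math.sqrt(dot(v, v))


def angle(u, v):
    """Angle in radians from cos(theta) = u.v / (|u| |v|)."""
    return math.acos(dot(u, v) / (length(u) * length(v)))


def project(u, v):
    """Orthogonal projection of u onto the line of v: (u.v / v.v) v."""
    s = dot(u, v) / dot(v, v)
    return [s * x for x in v]


def gram_schmidt(vectors):
    """Turn linearly independent vectors into mutually orthogonal ones."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            w = [x - y for x, y in zip(w, project(w, b))]
        basis.append(w)
    return basis


ortho = gram_schmidt([(1, 1, 0), (1, 0, 1), (0, 1, 1)])
```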

It seems that the inner product is an essential operation in vector algebra. Extending vectors to blades requires comparing and manipulating blades using a similar operation. Many generalizations of the inner product exist, with varying algebraic properties and geometric significance.

The Left Contraction Product is one such algebraic generalization defined on blades with strong geometric significance. In Euclidean space, if we have an r-dimensional subspace represented by the r-blade A, and an s-dimensional subspace represented by the s-blade B, and r is less than or equal to s, then the contraction of A on B is a new (s-r)-blade (representing an (s-r)-dimensional subspace) obtained as follows:

- Project A’s subspace on B’s subspace, assume this gives a subspace represented by a blade P.

- Find the orthogonal complement of P’s subspace within B’s subspace. This orthogonal complement is represented by blade C computed algebraically from the left contraction of A on B.
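For the simplest case, a vector a contracted onto a 2-blade b∧c in Euclidean space, the left contraction has the well-known expansion a ⌋ (b∧c) = (a·b) c - (a·c) b. The sketch below implements only that case (not the general blade formula) and checks the two properties described above: the result lies in the plane of b∧c and is orthogonal to a, hence also to a’s projection onto that plane. Names and test vectors are illustrative.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def wedge(a, b):
    """Bivector a^b as components on (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


def wedge_bv(B, v):
    """e1^e2^e3 coefficient of the trivector B^v (zero iff v is in B's plane)."""
    return B[0] * v[2] - B[1] * v[1] + B[2] * v[0]


def lcontract_vec_biv(a, b, c):
    """Left contraction of vector a onto the 2-blade b^c (Euclidean metric):
    a _| (b^c) = (a.b) c - (a.c) b, a vector since grade 2 - 1 = 1."""
    ab, ac = dot(a, b), dot(a, c)
    return tuple(ab * ci - ac * bi for bi, ci in zip(b, c))


b, c = (1, 0, 2), (0, 1, 1)   # two vectors spanning a plane through the origin
a = (1, 2, 3)                 # a vector outside that plane
r = lcontract_vec_biv(a, b, c)
```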

We can summarize this process by saying that the left contraction of A on B finds the blade orthogonal to A’s projection within B. This fundamental operation on blades has many important mathematical and geometric properties that simplify computing with subspaces. Instead of computing with the vectors that span a subspace using matrix algorithms, we can now directly perform all the following operations on subspaces of any dimension, without needing their spanning vectors or matrix algebra:

- Find the “norm” (length, area, volume, etc.) of a subspace.

- Find the unit blade that represents a subspace.

- Find the “inverse” of a blade; the same blade divided by its squared norm.

- Project a subspace on another subspace.

- Find the angle between two same-dimension subspaces (assuming they intersect in a single line).

- Test the orthogonality of a vector to a whole subspace.

- Find the orthogonal complement of a subspace within a larger subspace.

- Generalize the notorious cross product to any dimension. The cross product of two vectors in 3D Euclidean space is geometrically equivalent to the orthogonal complement of the plane spanned by the two vectors.

- Indirectly represent a subspace with a blade, as the set of all vectors having a zero contraction with the blade. This method is called the Inner Product Null Space (IPNS) representation of subspaces. We can convert between the IPNS and OPNS representations using a simple algebraic operation called dualization.
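The cross-product bullet can be checked numerically. In 3D Euclidean GA, the dual of a bivector B is B I^-1 with I = e1∧e2∧e3, which maps e1∧e2 to e3, e1∧e3 to -e2, and e2∧e3 to e1; applied to a∧b it reproduces the classical cross product. This Python sketch assumes that component layout and uses hypothetical helper names. In higher dimensions the outer product still works while its dual is no longer a vector, which is why the cross product itself does not generalize.

```python
def wedge(a, b):
    """Bivector a^b as components on (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


def dual_bivector(B):
    """Orthogonal complement of the plane B in 3D Euclidean space, B I^-1:
    e12 -> e3, e13 -> -e2, e23 -> e1."""
    b12, b13, b23 = B
    return (b23, -b13, b12)


def cross(a, b):
    """Classical 3D cross product, for comparison."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


pairs = [((1, 0, 2), (0, 1, 1)), ((2, -1, 3), (4, 0, 1)), ((1, 0, 0), (0, 1, 0))]
results = [(dual_bivector(wedge(a, b)), cross(a, b)) for a, b in pairs]
```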

In summary, using the outer product we create OPNS blades to directly represent subspaces. Using the contraction, we convert between the OPNS blade and an IPNS blade representing the same subspace indirectly. Using both operations on blades, we get a rich algebra for computing with subspaces of any dimension with any metric. Using the addition operation, we can now define linear combinations of blades of different grades to construct Multivectors, a powerful and geometrically significant algebraic alternative to matrices and tensors. All linear operations on blades can be extended using linear combinations to act on multivectors. This beautiful mathematical structure is called the Grassmann-Cayley Algebra and is used extensively to study projective geometry, an important part of the full construction of Geometric Algebra.

7. Outermorphisms

Just as we generalized the metric (the inner product) to act on blades, we now generalize linear transforms on vectors to act on blades. An Outermorphism is a simple extension of a linear transform with many fascinating properties and implications:

- First, we begin with an abstract definition of a linear mapping f(.) between two vector spaces defined on the same scalar field. If the two vector spaces are the same, this mapping is called a linear operator or linear endomorphism. We may or may not use a matrix to define the linear mapping.

- Next, we extend the effect of the linear map to scalars by simply defining f(x) = x for all scalars x.

- Finally, we extend the linear map to act on blades by requiring it to preserve the outer product: the transform of the outer product of some vectors equals the outer product of the transforms of the vectors.

Using this simple algebraic extension we can apply any linear transform to blades and multivectors. We can symbolically study in a coordinate-free manner the effects of linear transforms on subspaces, not just single vectors. We can numerically compute the outermorphisms of blades using sparse matrices. This can be very useful in many numerical linear algebra algorithms and certainly deserves much more attention than is currently given.
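One way to see the numerical side, sketched here for a 3D linear map f given as a 3x3 matrix: on bivectors, the outermorphism acts through the 3x3 matrix whose columns are f(e_i)∧f(e_j), i.e. the 2x2 minors of f. The full outermorphism on all 8 basis blades of the 3D algebra is block diagonal by grade (blocks of size 1, 3, 3, 1), which is one reading of the sparsity mentioned above. Helper names are hypothetical; the check confirms that transforming a∧b directly agrees with wedging the transformed vectors.

```python
def apply(f, v):
    """Apply the linear map with 3x3 matrix f (list of rows) to a 3-tuple."""
    return tuple(sum(f[i][j] * v[j] for j in range(3)) for i in range(3))


def wedge(a, b):
    """Bivector a^b as components on (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


PAIRS = [(0, 1), (0, 2), (1, 2)]   # basis bivectors e1^e2, e1^e3, e2^e3


def outermorphism_grade2(f):
    """Matrix of the outermorphism of f acting on bivectors.

    Column k holds the components of f(e_i) ^ f(e_j) for the k-th pair (i, j)."""
    image = [tuple(f[r][i] for r in range(3)) for i in range(3)]   # f(e_i) = column i
    cols = [wedge(image[i], image[j]) for i, j in PAIRS]
    return [[cols[k][r] for k in range(3)] for r in range(3)]


f = [[1, 2, 0],
     [0, 1, 3],
     [4, 0, 1]]
a, b = (1, 0, 2), (0, 1, 1)
F2 = outermorphism_grade2(f)
lhs = apply(F2, wedge(a, b))            # transform the blade a^b directly
rhs = wedge(apply(f, a), apply(f, b))   # transform the vectors, then wedge
```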

Using outermorphisms, many abstract concepts related to linear mappings are much easier to express and understand:

- Determinants are classically associated with square matrices, but they are really properties of linear operators. The determinant of a linear operator is the ratio of the operator’s image of the space’s pseudo-scalar blade to the pseudo-scalar blade itself. The whole theory of determinants can be derived from outermorphisms much more elegantly and clearly without any use of matrices or coordinates.

- The adjoint linear operator is also classically associated with square matrices while being a more abstract and fundamental concept of linear operators. Outermorphisms also represent adjoint operators in a more natural way both symbolically and numerically.

- We can study many properties of the inverse of a linear operator using outermorphisms. We find, for example, that to apply the inverse operator to an IPNS blade we need a different algebraic treatment 9 than for an OPNS blade to get geometrically consistent results.

- We can use outermorphisms to represent a very important class of linear operators: the Orthogonal Transformations that preserve the metric of the vector space 10. Orthogonal transformations are typically represented using orthogonal matrices, but outermorphisms provide many useful and geometrically significant insights about their abstract coordinate-free properties.
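The determinant bullet above translates almost literally into code. This minimal sketch (3D Euclidean space, hypothetical helper names) applies f to e1, e2, e3 and wedges the results; the single e1∧e2∧e3 coefficient of f(e1)∧f(e2)∧f(e3) is det(f). Since the outermorphism of a composition is the composition of outermorphisms, det(g∘f) = det(g)·det(f) follows, and the snippet checks it on an example.

```python
def apply(f, v):
    """Apply the linear map with 3x3 matrix f (list of rows) to a 3D vector."""
    return tuple(sum(f[i][j] * v[j] for j in range(3)) for i in range(3))


def wedge(a, b):
    """Bivector a^b as components on (e1^e2, e1^e3, e2^e3)."""
    return (a[0] * b[1] - a[1] * b[0],
            a[0] * b[2] - a[2] * b[0],
            a[1] * b[2] - a[2] * b[1])


def wedge_bv(B, v):
    """e1^e2^e3 coefficient of B^v (e12^e3 = e123, e13^e2 = -e123, e23^e1 = e123)."""
    return B[0] * v[2] - B[1] * v[1] + B[2] * v[0]


def determinant(f):
    """Scale factor of the pseudo-scalar: f(e1)^f(e2)^f(e3) = det(f) e1^e2^e3."""
    e1, e2, e3 = (1, 0, 0), (0, 1, 0), (0, 0, 1)
    return wedge_bv(wedge(apply(f, e1), apply(f, e2)), apply(f, e3))


def compose(g, f):
    """Matrix of the composite operator x -> g(f(x))."""
    return [[sum(g[i][k] * f[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


f = [[1, 2, 0], [0, 1, 3], [4, 0, 1]]
g = [[2, 0, 0], [0, 3, 0], [1, 0, 1]]
```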

With outermorphisms we have all the mathematical structure of Linear Transforms generalized to subspaces of any dimension. With the Outer Product, Contraction, and Outermorphisms we have a large toolset for computing with subspaces without the need for subspace decomposition or depending on matrices and coordinates.

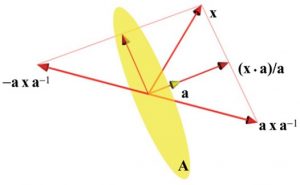

8. The Geometric Product

The final algebraic ingredient of the fascinating GA mathematical structure is the Geometric Product. The geometric product is the most general bilinear product in GA between arbitrary multivectors. The outer product, contraction, and all other bilinear products are special cases of the geometric product and can, in fact, be defined based on the geometric product alone. The full algebraic structure of GA can be stated axiomatically based on the geometric product and addition operations without using the other products. Personally, I don’t like this pure mathematics approach because it delays the geometric significance to a later stage. For so many years, Clifford Algebra was treated just like any other algebra in pure mathematics, and the powerful geometrically significant structure we are talking about here was hidden behind the cold symbols and pure algebraic relations.
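A minimal sketch of the geometric product in the smallest interesting case, the 2D Euclidean GA, assuming multivectors are stored as tuples (scalar, e1, e2, e1∧e2). The multiplication table follows from e1e1 = e2e2 = 1 and e2e1 = -e1e2. For two vectors u and v, the scalar part of uv is u·v and the bivector part is u∧v; the hypothetical helper `inner_outer` recovers both from the geometric product alone through its symmetric and antisymmetric parts.

```python
def gp(a, b):
    """Geometric product in the 2D Euclidean GA.

    Multivectors are tuples (scalar, e1, e2, e12) where e12 = e1^e2.
    Rules used: e1e1 = e2e2 = 1, e2e1 = -e12, e12e12 = -1."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 + a1 * b1 + a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 - a2 * b3 + a3 * b2,
            a0 * b2 + a2 * b0 + a1 * b3 - a3 * b1,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def vector(x, y):
    return (0, x, y, 0)


def inner_outer(u, v):
    """For vectors: u.v = (uv + vu)/2 and u^v = (uv - vu)/2 (the e12 coefficient)."""
    uv, vu = gp(u, v), gp(v, u)
    return (uv[0] + vu[0]) / 2, (uv[3] - vu[3]) / 2


u, v = vector(3, 1), vector(1, 2)
print(gp(u, v))            # (5, 0, 0, 5): u.v = 3*1 + 1*2 and u^v = 3*2 - 1*1
print(gp(v, u))            # (5, 0, 0, -5): the outer part changes sign
print(inner_outer(u, v))   # (5.0, 5.0)
```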

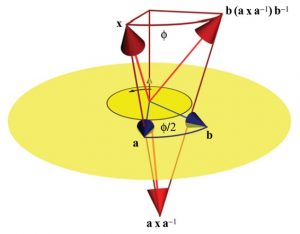

One important tool the geometric product provides is the ability to reflect a vector c in another vector n directly, or in the hyperplane that n indirectly represents (the hyperplane orthogonal to n). This seemingly simple but very useful algebraic tool opens the door to the powerful versor representation of orthogonal linear operators that we will talk about in the next section.
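Sketched in the 2D Euclidean GA (multivectors as tuples (scalar, e1, e2, e1∧e2), exact arithmetic with `fractions.Fraction`, hypothetical helper names): the sandwich n c n^-1 reflects c in the line spanned by n, and -n c n^-1 reflects it in the hyperplane orthogonal to n. The last function is the classical formula c - 2(c·n)/(n·n) n used by Householder matrices, for comparison.

```python
from fractions import Fraction


def gp(a, b):
    """Geometric product in the 2D Euclidean GA; multivectors are (scalar, e1, e2, e12)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 + a1 * b1 + a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 - a2 * b3 + a3 * b2,
            a0 * b2 + a2 * b0 + a1 * b3 - a3 * b1,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def vector(x, y):
    return (0, x, y, 0)


def inverse_vector(n):
    """Inverse of a non-null vector under the geometric product: n / (n.n)."""
    nn = Fraction(n[1] * n[1] + n[2] * n[2])
    return (0, n[1] / nn, n[2] / nn, 0)


def reflect_in_vector(c, n):
    """n c n^-1: reflection of c in the line spanned by n."""
    return gp(gp(n, c), inverse_vector(n))


def reflect_in_hyperplane(c, n):
    """-n c n^-1: reflection of c in the hyperplane orthogonal to n."""
    return tuple(-x for x in reflect_in_vector(c, n))


def householder(c, n):
    """Classical formula c - 2 (c.n)/(n.n) n on plain 2D tuples, for comparison."""
    s = Fraction(2 * (c[0] * n[0] + c[1] * n[1]), n[0] ** 2 + n[1] ** 2)
    return (c[0] - s * n[0], c[1] - s * n[1])


c, n = vector(3, 1), vector(1, 1)
across_n = reflect_in_vector(c, n)            # mirrored across the line y = x
across_plane = reflect_in_hyperplane(c, n)    # mirrored across the line y = -x
```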

9. Versors

Versors constitute a very useful class of multivectors. A versor is the geometric product of several vectors, each having a non-zero inner product with itself. If the vectors are also mutually orthogonal, the versor is actually a blade. Because each of its vector factors is invertible (the inverse of a vector is the vector divided by its inner product with itself), every versor has an inverse with respect to the geometric product.

The geometric significance of these algebraic properties can be found by reading an important result of the Cartan–Dieudonné theorem:

Any Orthogonal Transformation is a composition of a series of reflections in homogeneous hyperplanes.

This theorem underlies, for example, the use of Householder matrices (reflections) in classical linear algebra. Because we can express reflections in hyperplanes using simple geometric products of vectors, any orthogonal transformation in our GA can be expressed as a series of geometric products with vectors. This important algebraic tool is called the Versor Product.

Special kinds of versors exist that have important practical applications. For example, a rotor in Euclidean GA is the geometric product of two unit vectors; rotors are algebraically isomorphic to unit complex numbers (in the 2D GA case) and to unit quaternions (in the 3D GA case). Rotors are very useful and efficient tools for applying rotations to arbitrary subspaces, with many properties better than those of rotation matrices and other related representations.
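A minimal 2D sketch of a rotor, with multivectors stored as tuples (scalar, e1, e2, e1∧e2) and hypothetical helper names: R = ba for unit vectors a and b, and the versor product R v R~ (R~ is the reverse of R) rotates v counter-clockwise by twice the angle from a to b. The snippet compares the result with multiplication by a unit complex number, and also checks that even multivectors s + t e1∧e2 multiply like complex numbers s + ti.

```python
import cmath
import math


def gp(a, b):
    """Geometric product in the 2D Euclidean GA; multivectors are (scalar, e1, e2, e12)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 + a1 * b1 + a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 - a2 * b3 + a3 * b2,
            a0 * b2 + a2 * b0 + a1 * b3 - a3 * b1,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def vector(x, y):
    return (0, x, y, 0)


def reverse(m):
    """Reversion: flips the sign of the e12 part (e1e2 -> e2e1 = -e12)."""
    s, x, y, b = m
    return (s, x, y, -b)


def rotor(a, b):
    """R = b a for unit vectors a and b."""
    return gp(b, a)


def rotate(R, v):
    """Versor product R v R~: rotation by twice the angle from a to b."""
    return gp(gp(R, v), reverse(R))


def even_to_complex(m):
    """Map the even multivector s + t e12 to the complex number s + t i."""
    return complex(m[0], m[3])


theta = math.pi / 6                        # 30 degrees between a and b
a = vector(1, 0)
b = vector(math.cos(theta), math.sin(theta))
w = rotate(rotor(a, b), vector(2, 1))      # (2, 1) rotated by 60 degrees
z = complex(2, 1) * cmath.exp(2j * theta)  # the same rotation with complex numbers
```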

In fact, using the versor product all isometries of the base vector space can be algebraically represented using multivectors. In this way, multivectors represent both Geometric States (subspaces as blades) and metric-preserving Geometric Actions (orthogonal transforms as versors). We now have a very powerful, compact, and geometrically significant algebra ready for our final step: ascending to higher dimensions.

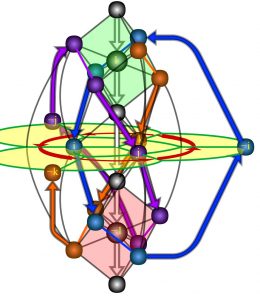

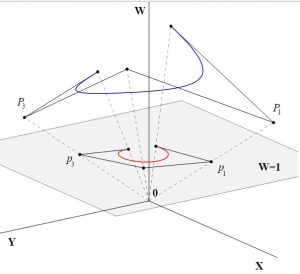

10. GA Space Embedding

The previous 9 elements of GA construct a set of algebraic tools to conceptually escape into higher dimensions. To understand the significance of this process, we can study a simpler example having a great practical impact in computer science and engineering: Homogeneous Coordinates and the related Homogeneous Transformation Matrices.

In Euclidean space, simple geometric concepts like points, general lines, and planes can’t be mathematically represented as elements of a linear vector space; they simply don’t satisfy the abstract axioms of vector spaces. In 1827, August Ferdinand Möbius introduced homogeneous coordinates, or projective coordinates, to solve this problem. By embedding our Euclidean space into a one-dimension-higher projective space, we could easily model additional geometric concepts as finite projective vectors like directions, points, points at infinity, and weighted points. This algebraic tool has greatly impacted many applications in engineering and computer science including robotics, computer graphics, computer vision, computer-aided design, and more.
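A minimal sketch of homogeneous coordinates in 2D (hypothetical helper names): a translation, which is not a linear map of the plane, becomes a 3x3 matrix acting linearly on (x, y, w). Points (w ≠ 0) move, directions (w = 0, points at infinity) do not, and the weighted point (2, 2, 2) represents the same location (1, 1) as (1, 1, 1).

```python
def translation(tx, ty):
    """Homogeneous 3x3 matrix of the 2D translation by (tx, ty)."""
    return [[1, 0, tx],
            [0, 1, ty],
            [0, 0, 1]]


def apply(m, v):
    """Multiply a 3x3 matrix by a homogeneous 3-vector (x, y, w)."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def to_cartesian(v):
    """Normalize a finite point (w != 0) back to 2D coordinates."""
    x, y, w = v
    return (x / w, y / w)


T = translation(5, -2)
point = (1, 1, 1)       # the point (1, 1)
direction = (1, 1, 0)   # a direction: a point at infinity
weighted = (2, 2, 2)    # the point (1, 1) with weight 2
```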

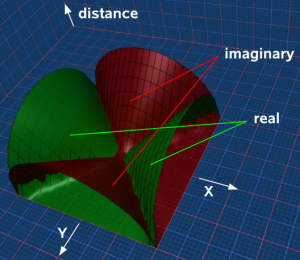

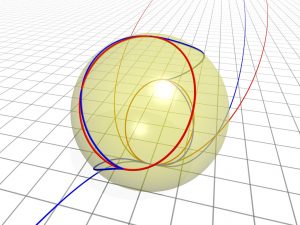

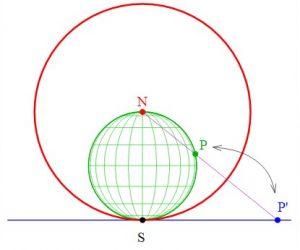

Using the powerful algebraic elements of Geometric Algebra, we can exploit the idea of embedding a space inside a larger space to its fullest. Many geometric concepts can be “algebraically linearized” this way, meaning they can be completely represented using multivectors instead of the commonly used combinatorial geometric representations. This transition in representation can have profound conceptual and computational effects on many important areas, like computational and discrete geometry. To get an idea of such geometric concepts, here is a list of some geometric states (objects) and actions (transformations) that can be represented by multivectors (GA blades and versors) in the 5D Conformal Geometric Algebra (CGA). The 5D CGA is the result of embedding our 3D Euclidean space into a conformal space with two more dimensions and a Minkowski metric:

- Round and Flat Geometric Primitives: Spheres and Planes in general positions in space – General Circles and Lines – Point Pairs resulting from intersecting a line and a sphere, for example – Points as zero-radius spheres. We can even have rounds with imaginary radius and lines and points at infinity useful for many intermediate geometric computations.

- Tangent Geometric Primitives:

- Similarity Transformations: Rotations, Translations, Uniform Scaling, Reflection in arbitrary Planes, and their compositions including Motors used extensively in representing Screw Motions.

- Special Kinds of Conformal Transformations: Most notably, reflections in spheres that can be composed into many useful transformations used, for example, to study Inversive Geometry.
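To make the CGA embedding tangible, here is a minimal sketch that uses only the 5D vector inner product, not a full GA implementation. It assumes the common convention of an orthonormal basis e1, e2, e3, e+, e- with e+ squaring to 1 and e- squaring to -1, the null vectors e0 = (e- - e+)/2 and e∞ = e- + e+, and the embedding X = x + ½|x|² e∞ + e0. Under these assumptions, embedded points are null vectors, X·Y = -½|x - y|², and a sphere is the single vector S = X_c - ½r² e∞, whose inner product with X vanishes exactly for points on the sphere (an IPNS representation). Helper names are hypothetical.

```python
METRIC = (1, 1, 1, 1, -1)   # basis order e1, e2, e3, e+, e-


def inner(u, v):
    """Inner product of two 5D vectors under the Minkowski metric above."""
    return sum(g * a * b for g, a, b in zip(METRIC, u, v))


def add(*vs):
    return tuple(sum(c) for c in zip(*vs))


def scale(s, v):
    return tuple(s * c for c in v)


E0 = (0, 0, 0, -0.5, 0.5)   # origin:   e0 = (e- - e+) / 2
EINF = (0, 0, 0, 1, 1)      # infinity: einf = e- + e+


def up(x):
    """Conformal embedding of a 3D point: X = x + |x|^2/2 einf + e0."""
    sq = sum(c * c for c in x)
    return add((x[0], x[1], x[2], 0, 0), scale(sq / 2, EINF), E0)


def sphere(center, radius):
    """IPNS vector of a sphere: S = up(center) - radius^2/2 einf."""
    return add(up(center), scale(-radius ** 2 / 2, EINF))


X, Y = up((1, 2, 2)), up((4, 6, 2))
S = sphere((0, 0, 0), 3)
```

Here |(1, 2, 2)| = 3, so that point lies on the sphere S, while (1, 1, 1) does not.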

All these geometric concepts and their incidence relations can be represented and studied with GA’s linear multivectors without any use of matrices, just by adding two more dimensions with a Minkowski metric to our 3D Euclidean space. Many more higher-dimensional GA embeddings are currently being studied 11 that can add more complex geometric objects and transformations to our list of typical geometric primitives. We are just at the beginning of a great journey of fascinating geometric explorations.

- There is a very important 11th element of Multivector Differentiation that leads to Geometric Calculus, I’m still trying to study this element, though. See this paper for a good introduction. ↩

- See “The vector algebra war: a historical perspective” ↩

- See for example Raymond O. Wells Jr’s paper “Key developments in geometry in the 19th Century” and Jeremy Gray’s book “Worlds Out of Nothing – A Course in the History of Geometry in the 19th Century” ↩

- For example, many trigonometric identities and relations can be found here, and analytic geometry definitions and formulas here and here ↩

- See for example Sheldon Axler’s book: “Linear Algebra Done Right” ↩

- See for example Carl D. Meyer’s book “Matrix analysis and applied linear algebra” ↩

- See this article about “The Development of Spatial and Geometric Thinking” and this chapter about “Geometric Thinking and Geometric Concepts” ↩

- To be accurate, a general linear combination of k-blades is not guaranteed to produce another k-blade; the set of all k-blades of a space is not a vector space by itself. A more general algebraic concept is the k-vector: the set of all k-vectors is, algebraically, a vector space, and the k-blades are some of its members. ↩

- Namely, to use the inverse adjoint outermorphism rather than the original outermorphism itself. This is similar to transforming normal vectors with homogeneous matrices, where we need to use the inverse transpose matrix rather than the original matrix. ↩

- If V(.) is an orthogonal operator, then its outermorphism preserves both the outer product and the contraction. This has many significant implications for Geometric Algebra, as we will see when talking about versors shortly. ↩

- See for example these papers here, here, here, and here. ↩